Update: Please keep in mind that the native XGBoost API and the sklearn wrapper for XGBoost use a different ordering of the arguments.

In your `gradient_se` function, the signs of `y_true` and `y_pred` are the wrong way round. For the hessian the argument order doesn't make a difference, since the hessian should return 2 for all x values, and you've done that correctly. The correct implementation is as follows: `def gradient_se(y_pred, y_true):`
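A minimal sketch of a corrected squared-error objective for the sklearn wrapper follows. It assumes the loss is `(y_pred - y_true)**2` (gradient `2 * (y_pred - y_true)`, hessian 2) and that `XGBRegressor` calls a custom objective as `objective(y_true, y_pred)` and expects `(grad, hess)` back. The helper bodies are a reconstruction for illustration, not the answer's original code; the helpers are declared as `(y_pred, y_true)` to match the signature above, so `custom_se` has to reorder the arguments when it calls them.

```python
import numpy as np


def gradient_se(y_pred: np.ndarray, y_true: np.ndarray) -> np.ndarray:
    # d/dy_pred of (y_pred - y_true)**2: prediction minus target, times 2.
    return 2.0 * (y_pred - y_true)


def hessian_se(y_pred: np.ndarray, y_true: np.ndarray) -> np.ndarray:
    # The second derivative is the constant 2 for every sample.
    return np.full_like(y_pred, 2.0, dtype=float)


def custom_se(y_true: np.ndarray, y_pred: np.ndarray):
    # XGBRegressor passes (y_true, y_pred); the helpers above are declared
    # as (y_pred, y_true), so the arguments are swapped at the call site.
    return gradient_se(y_pred, y_true), hessian_se(y_pred, y_true)
```

It would then be passed as `xgb.XGBRegressor(objective=custom_se)`.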

You pass the `y_true` and `y_pred` values in reversed order from your `custom_se(y_true, y_pred)` function to both the `gradient_se` and `hessian_se` functions.
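Swapping the two arguments of a squared-error gradient flips its sign, while the constant hessian is unaffected, which is why only the gradient goes wrong. A small sketch, assuming the same `(y_pred - y_true)**2` loss and a `gradient_se` declared as `(y_pred, y_true)`, checks the analytic gradient against a central finite difference; `loss_se` and `numeric_grad` are hypothetical helpers introduced only for this check.

```python
import numpy as np


def loss_se(y_pred, y_true):
    # Per-sample squared error (no mean), which has a hessian of 2.
    return (y_pred - y_true) ** 2


def gradient_se(y_pred, y_true):
    return 2.0 * (y_pred - y_true)


def numeric_grad(y_pred, y_true, eps=1e-6):
    # Central difference of the per-sample loss with respect to y_pred.
    return (loss_se(y_pred + eps, y_true) - loss_se(y_pred - eps, y_true)) / (2 * eps)


y_true = np.array([1.0, 2.0, 3.0])
y_pred = np.array([1.5, 1.0, 3.2])

correct = gradient_se(y_pred, y_true)
swapped = gradient_se(y_true, y_pred)  # arguments passed in reversed order

print(np.allclose(correct, numeric_grad(y_pred, y_true)))  # True
print(np.allclose(swapped, -correct))                       # True
```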

According to the documentation, the library passes the predicted values (`y_pred` in your case) and the ground-truth values (`y_true` in your case) in this order.

A simplified version of MSE is used as the loss:

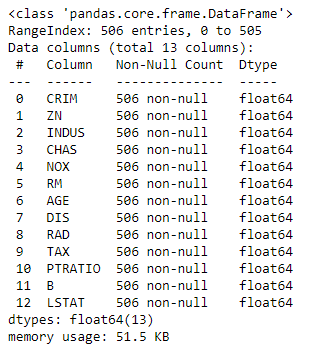

Python XGBRegressor objective code

So I am relatively new to the ML/AI game in Python, and I'm currently working on a problem surrounding the implementation of a custom objective function for XGBoost. My differential-equation knowledge is pretty rusty, so I've created a custom objective function with a gradient and hessian that models the mean squared error function that is run as the default objective function in `XGBRegressor`, to make sure that I am doing all of this correctly. The problem is that the results of the model (the error outputs) are close but not identical for the most part, and way off for some points. I don't know what I'm doing wrong, or how that could be possible if I am computing things correctly. If you all could look at this and maybe provide insight into where I am wrong, that would be awesome!

The original code without a custom function is: `import xgboost as xgb`

And my custom objective function for MSE is as follows: `def gradient_se(y_true, y_pred):`
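One further factor, separate from the sign error, may contribute to results that are close but not identical. XGBoost's built-in `reg:squarederror` uses the loss `0.5 * (y_pred - y_true)**2`, i.e. gradient `y_pred - y_true` and hessian 1, whereas a custom `(y_pred - y_true)**2` doubles both. A leaf's weight is `-G / (H + lambda)`, with `G` and `H` the sums of gradients and hessians in the leaf and `lambda` the L2 penalty (`reg_lambda`, default 1), so the factor of 2 only cancels when `lambda` is 0; `min_child_weight`, which is compared against hessian sums, is affected too. The sketch below uses made-up values for one leaf, and `leaf_weight` is a hypothetical helper.

```python
import numpy as np


def leaf_weight(grad: np.ndarray, hess: np.ndarray, reg_lambda: float = 1.0) -> float:
    # Optimal leaf value under XGBoost's second-order objective: -G / (H + lambda).
    return -grad.sum() / (hess.sum() + reg_lambda)


y_true = np.array([3.0, 5.0, 4.0])
y_pred = np.zeros_like(y_true)  # hypothetical current predictions in one leaf

# Built-in convention: loss 0.5 * (y_pred - y_true)**2 -> grad = residual, hess = 1.
w_builtin = leaf_weight(y_pred - y_true, np.ones_like(y_true))

# Custom convention: loss (y_pred - y_true)**2 -> grad = 2 * residual, hess = 2.
w_custom = leaf_weight(2.0 * (y_pred - y_true), np.full_like(y_true, 2.0))

print(w_builtin)  # 3.0, i.e. 12 / (3 + 1)
print(w_custom)   # about 3.43, i.e. 24 / (6 + 1)

# Without the L2 penalty the factor of 2 cancels and both give 4.0.
print(np.isclose(leaf_weight(y_pred - y_true, np.ones_like(y_true), 0.0),
                 leaf_weight(2.0 * (y_pred - y_true), np.full_like(y_true, 2.0), 0.0)))
```

Using gradient `y_pred - y_true` with hessian 1 in the custom objective would mirror the built-in convention more closely.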
